Navigating Kubernetes: Features, Use Cases, and Best Practices

Using the right tools for container orchestration and management is essential. In recent years, Kubernetes has emerged as the leading open-source system for automating deployment, scaling, and management of containerized applications.

With its powerful features for clustering, load balancing, auto-scaling, and more, Kubernetes provides a robust platform for deploying containerized workloads in production.

However, with its many components and rapid evolution, the Kubernetes ecosystem can seem daunting to navigate at first. This article provides an in-depth guide to the key capabilities and use cases of Kubernetes, along with best practices for implementation.

We'll cover topics ranging from cluster architecture and deployment options to monitoring, security, and integration with public cloud platforms. Whether you're considering Kubernetes for the first time or looking to expand your skills, this guide aims to give you the understanding you need to use Kubernetes successfully in your environment and to navigate its landscape with confidence.

What is Kubernetes?

Kubernetes is an open-source container orchestration system for automating deployment, scaling, and management of containerized applications. Originally designed by Google, Kubernetes is now hosted by the Cloud Native Computing Foundation (CNCF) and maintained by a broad open-source community.

As a platform, Kubernetes provides a framework to run distributed systems resiliently. It schedules workloads onto the nodes of a compute cluster and continuously manages them so that their actual state matches the state users have declared.
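To make the declared-intent idea concrete, here is a minimal Python sketch of a reconciliation (control) loop. `ToyCluster` and `reconcile` are hypothetical names for illustration only; real Kubernetes controllers watch the API server rather than an in-memory list, but the compare-and-correct pattern is the same.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for observed cluster state; not a Kubernetes API."""
    running: list = field(default_factory=list)
    _ids: itertools.count = field(default_factory=itertools.count)

    def start_pod(self, app: str) -> None:
        # Give each new pod a unique name.
        self.running.append(f"{app}-{next(self._ids)}")

    def stop_pod(self) -> None:
        self.running.pop()


def reconcile(cluster: ToyCluster, app: str, desired_replicas: int) -> None:
    """One pass of a control loop: compare observed with desired state and act on the difference."""
    diff = desired_replicas - len(cluster.running)
    for _ in range(max(diff, 0)):   # too few pods: start more
        cluster.start_pod(app)
    for _ in range(max(-diff, 0)):  # too many pods: stop the surplus
        cluster.stop_pod()


if __name__ == "__main__":
    cluster = ToyCluster()
    reconcile(cluster, "web", 3)
    cluster.running.pop(0)        # simulate a pod crashing
    reconcile(cluster, "web", 3)  # the loop notices and replaces it
    print(cluster.running)        # ['web-1', 'web-2', 'web-3']
```

The loop never needs to know *why* the state drifted (a crash, a deleted pod, a changed replica count); it only compares observed and desired state, which is what makes this pattern resilient.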

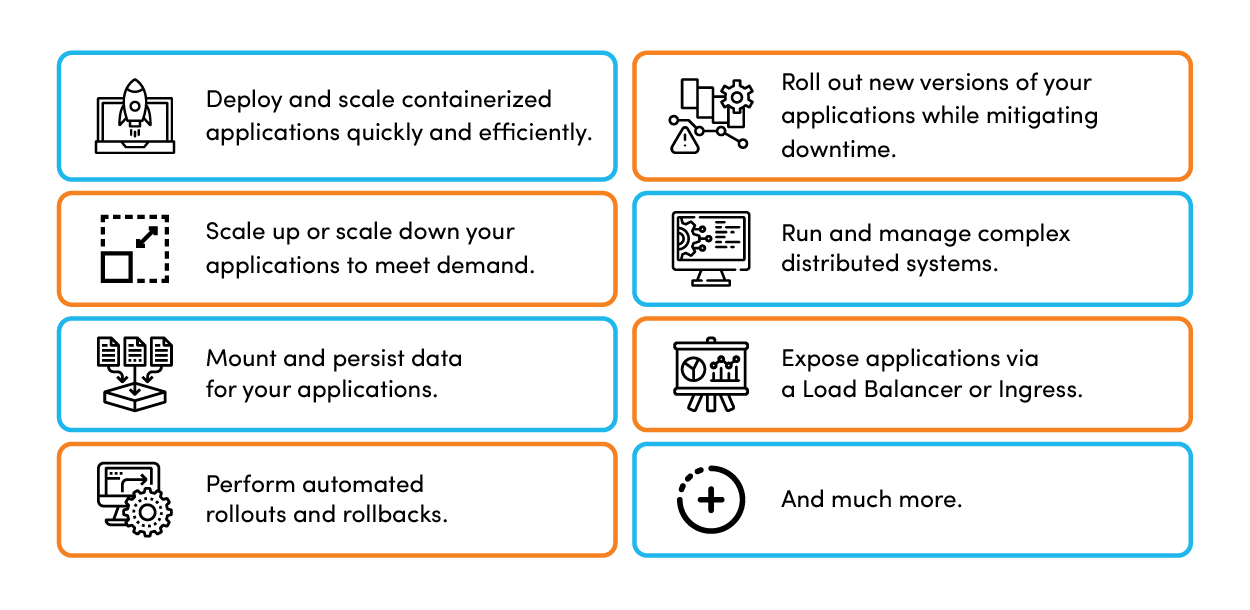

Using Kubernetes, you can:

- Deploy and scale containerized applications quickly and efficiently.

- Roll out new versions of your applications with minimal downtime.

- Scale up or scale down your applications to meet demand.

- Run and manage complex distributed systems.

- Mount and persist data for your applications.

- Expose applications through a LoadBalancer Service or an Ingress.

- Perform automated rollouts and rollbacks.

- And much more.
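Scaling to meet demand is usually delegated to the HorizontalPodAutoscaler (HPA). The sketch below is a simplified Python model of the core calculation described in the HPA documentation, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with the default 10% tolerance. The function name and the min/max defaults are assumptions, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style calculation (ignores readiness, missing metrics, stabilization)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        proposed = current_replicas
    else:
        # desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
        proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target -> scale to 6.
    print(desired_replicas(4, 90, 60))
```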

Kubernetes orchestrates compute, networking, and storage resources on behalf of user workloads: you declare the desired state of your applications, and Kubernetes continuously works to make the cluster match it.

In summary, Kubernetes is a powerful open-source project that allows you to automate the deployment, scaling, and management of containerized applications. By providing a platform to run distributed systems resiliently, it has become the de facto standard for container orchestration.

Key Components and Architecture of a Kubernetes Cluster

To deploy a Kubernetes cluster, you will need to configure and set up the following core components:

Control Plane (Master) Nodes

Control plane nodes, historically called master nodes, manage the Kubernetes cluster. They schedule workloads, maintain cluster state, expose the API used to manage the cluster, and respond to changes such as failed nodes or pods. The control plane runs several main components:

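- etcd: A consistent, highly available key-value store that holds all cluster data.

- API Server (kube-apiserver): The front end of the control plane. It authenticates, authorizes, and validates API requests, persists the resulting objects in etcd, and serves the Kubernetes API to users, tools such as kubectl, and the other cluster components.

- Scheduler (kube-scheduler): Assigns newly created pods to nodes based on their resource requests, affinity and anti-affinity rules, taints and tolerations, and other constraints.

- Controller Manager (kube-controller-manager): Runs the controllers that handle node failures, pod replication, service endpoints, and other cluster-wide control loops.

- Cloud Controller Manager (cloud-controller-manager): On cloud providers, integrates the cluster with the provider's API, for example to provision load balancers for Services, configure network routes, and detect when a node's underlying virtual machine has been deleted. Storage provisioning is typically handled separately by storage (CSI) drivers.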
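The scheduler's job can be pictured as two phases: filter out nodes that cannot fit a pod, then score the rest. The Python sketch below is a deliberately simplified model with hypothetical class names; kube-scheduler uses many more plugins, but like this sketch it compares a pod's resource requests against each node's allocatable capacity minus what is already requested, not against live usage.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity_m: int        # allocatable CPU in millicores
    mem_capacity_mi: int       # allocatable memory in MiB
    cpu_requested_m: int = 0   # sum of requests of pods already on the node
    mem_requested_mi: int = 0


@dataclass
class PodSpec:
    cpu_request_m: int
    mem_request_mi: int


def schedule(pod: PodSpec, nodes: list[Node]) -> Optional[str]:
    # Filter phase: keep only nodes whose unrequested capacity fits the pod's requests.
    feasible = [
        n for n in nodes
        if n.cpu_capacity_m - n.cpu_requested_m >= pod.cpu_request_m
        and n.mem_capacity_mi - n.mem_requested_mi >= pod.mem_request_mi
    ]
    if not feasible:
        return None  # no fit: the pod would stay Pending

    # Score phase: prefer the node left with the largest free fraction ("least allocated" style).
    def score(n: Node) -> float:
        cpu_free = (n.cpu_capacity_m - n.cpu_requested_m - pod.cpu_request_m) / n.cpu_capacity_m
        mem_free = (n.mem_capacity_mi - n.mem_requested_mi - pod.mem_request_mi) / n.mem_capacity_mi
        return (cpu_free + mem_free) / 2

    return max(feasible, key=score).name


if __name__ == "__main__":
    nodes = [Node("a", 4000, 8192, 3500, 1024), Node("b", 4000, 8192, 2000, 4096)]
    print(schedule(PodSpec(1000, 1024), nodes))  # "a" lacks CPU, so "b"
```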

Worker Nodes

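Worker nodes run your application workloads; early Kubernetes documentation called them minions. Each worker node communicates with the control plane and typically runs three components: a container runtime that implements the Container Runtime Interface (CRI), such as containerd or CRI-O (built-in Docker Engine support through dockershim was removed in Kubernetes 1.24); the kubelet, an agent that registers the node with the API server and makes sure the containers described in its assigned pods are running; and kube-proxy, which maintains network rules on the node so that traffic sent to a Service reaches its pods.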

By understanding the architecture and components of a Kubernetes cluster, you can properly configure, deploy, and manage your own Kubernetes environment. Following best practices around separating control plane and worker nodes, running redundant control plane components, and hardening security will help ensure you have a stable platform for your containerized workloads.

Kubernetes Use Cases and Deployment Models

Microservices Architecture

Kubernetes is well suited to deploying and managing microservices architectures. Microservices are independent, modular components that work together to form a larger application. Kubernetes provides an excellent platform for deploying these discrete components and managing their lifecycles. Each microservice is typically packaged as its own Deployment, which runs one or more identical pods behind a Service, and Kubernetes handles starting, stopping, scaling, and updating those pods.
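As an illustration, the following Python sketch generates a Deployment and a matching Service for one microservice and prints them as JSON, which `kubectl apply -f` accepts just as it accepts YAML. The service name `orders`, the image `registry.example.com/orders:1.0.0`, and the port numbers are hypothetical placeholders.

```python
import json


def microservice_manifests(name: str, image: str, container_port: int, replicas: int = 2) -> dict:
    """Return a kubectl-compatible List holding a Deployment and a Service for one microservice."""
    labels = {"app": name}  # the Service selects the Deployment's pods through this label
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": container_port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "selector": labels,
            # Other pods in the namespace reach this service at <name>:80 via cluster DNS.
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    print(json.dumps(microservice_manifests("orders", "registry.example.com/orders:1.0.0", 8080), indent=2))
```

You could apply the output with `python orders_manifests.py | kubectl apply -f -`, where the script name is also a placeholder. The shared `app` label is what ties the pieces together: the Deployment's selector, its pod template, and the Service's selector must all agree.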

Continuous Integration and Continuous Delivery

Kubernetes fits naturally into a continuous integration and continuous delivery (CI/CD) workflow. When developers commit code changes, a CI/CD pipeline builds and tests a new container image and then deploys it to the cluster. Kubernetes’ declarative configuration and automation features make it easy to recreate environments, deploy the latest version of an application, and roll back quickly if needed.
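A common final step in such a pipeline is pointing the running Deployment at the freshly built image. The Python sketch below builds a strategic merge patch that updates one container's image to a tag derived from the commit SHA; the helper name, repository, and SHA are hypothetical, and tagging images by commit is a convention rather than a Kubernetes requirement.

```python
import json
import re


def image_patch(container: str, repository: str, git_sha: str) -> str:
    """Build a strategic merge patch that points one container at an image tagged with a commit SHA."""
    # Immutable, commit-based tags make rollbacks traceable; reject anything that is not a hex SHA.
    if not re.fullmatch(r"[0-9a-f]{7,40}", git_sha):
        raise ValueError(f"expected a hex commit SHA, got {git_sha!r}")
    patch = {
        "spec": {"template": {"spec": {"containers": [
            # Strategic merge patches merge the containers list by "name",
            # so only the named container's image changes.
            {"name": container, "image": f"{repository}:{git_sha[:12]}"},
        ]}}}
    }
    return json.dumps(patch)


if __name__ == "__main__":
    print(image_patch("orders", "registry.example.com/orders", "3f9c2a1b7d4e5f60718293a4b5c6d7e8f9012345"))
```

A pipeline might apply the result with `kubectl patch deployment orders --patch "$PATCH"` (or, more simply, `kubectl set image deployment/orders orders=<image>`), wait with `kubectl rollout status deployment/orders`, and revert with `kubectl rollout undo deployment/orders` if post-deploy checks fail.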

Hybrid and Multi-Cloud Deployments

Kubernetes supports hybrid and multi-cloud deployments, enabling you to run applications across a combination of on-premises infrastructure, public clouds, and private clouds. Because the core Kubernetes API is the same in every conformant cluster, your manifests and tools largely work the same in each environment, although provider-specific features such as load balancers and storage classes still differ. This portability reduces, though does not eliminate, vendor lock-in.

Serverless Computing

Kubernetes can serve as the foundation for serverless computing platforms. Frameworks built on top of it, such as Knative, allocate resources based on demand and scale workloads up and down as needed, including down to zero when there is no traffic. Developers can focus on writing application code and let the platform handle resource provisioning and management.
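The core idea behind demand-based scaling on such platforms can be sketched as sizing the number of pods to the number of in-flight requests. The Python function below is a hypothetical, heavily simplified model loosely inspired by concurrency-based autoscaling such as Knative's; it is not Knative's actual algorithm, which adds averaging windows, a panic mode for traffic bursts, and a grace period before scaling to zero.

```python
import math


def pods_for_concurrency(in_flight_requests: int, target_per_pod: int, max_pods: int = 100) -> int:
    """Hypothetical sizing rule: enough pods that each handles at most target_per_pod requests."""
    if target_per_pod <= 0:
        raise ValueError("target_per_pod must be positive")
    if in_flight_requests <= 0:
        return 0  # no traffic: scale to zero and stop consuming resources
    return min(max_pods, math.ceil(in_flight_requests / target_per_pod))


if __name__ == "__main__":
    for load in (0, 5, 25, 10_000):
        print(load, pods_for_concurrency(load, target_per_pod=10))
```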

Deployment Strategies

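Kubernetes Deployments natively support two strategies, Recreate and Rolling Update. Blue-green and canary releases are built on top of these primitives using labels, Services, and Ingress rules, or with add-on tools such as Argo Rollouts or Flagger:

Recreate: Terminates all pods running the current version before creating pods with the new version. This causes a brief outage, so it suits non-critical apps during development or apps that cannot run two versions side by side.

Rolling Update: Gradually replaces the current pods with new pods; this is the default Deployment strategy. Useful for critical production apps where downtime needs to be minimized.

Blue-Green: Runs a complete second environment with the new version alongside the current one, then switches all traffic to it at once, for example by changing a Service's label selector. Useful for apps where the new version needs to be validated before going live.

Canary: Routes a small share of traffic or users to the new version and increases that share gradually. Useful for validating the new version with real-world usage before fully deploying.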
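For the Rolling Update strategy, a Deployment's `spec.strategy.rollingUpdate.maxSurge` and `maxUnavailable` fields (both 25% by default) bound how far the rollout may go above or below the desired replica count. The Python sketch below computes those bounds using the documented rounding rules: percentages for `maxSurge` round up and percentages for `maxUnavailable` round down. The function names are hypothetical, and the fallback when both values resolve to zero reflects our reading of the Deployment controller, so treat it as an assumption.

```python
def _resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an int or a percentage string like "25%" into an absolute pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer ceiling/floor division avoids floating-point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple:
    """Return (max total pods, min available pods) during a rolling update."""
    surge = _resolve(max_surge, replicas, round_up=True)                # maxSurge rounds up
    unavailable = _resolve(max_unavailable, replicas, round_up=False)   # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        # Both resolving to zero would block progress; allow one unavailable pod instead (assumed).
        unavailable = 1
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rollout_bounds(10))  # (13, 8)
```

For example, with 10 replicas and the defaults, the rollout may run up to 13 pods while keeping at least 8 available.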

Matching these use cases and deployment strategies to your applications will help you get the most out of Kubernetes. With the proper configuration and management in place, Kubernetes can greatly streamline your deployment workflows.

Best Practices for Kubernetes in Production

Carefully Plan Your Cluster Architecture

When setting up Kubernetes for production, it is crucial to carefully plan your cluster architecture. Determine how many nodes and clusters you need based on your workload requirements. Also consider whether you need a multi-cluster architecture to separate environments or to scale. Define network policies and security controls to isolate workloads and namespaces within each cluster. Planning your architecture in detail before deploying will help ensure your Kubernetes environment is stable, scalable, and secure.
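A common starting point for isolating workloads is a default-deny policy in each namespace, to which you then add explicit allow rules. The Python sketch below emits such a NetworkPolicy as JSON; the namespace names are hypothetical, and the policy only takes effect if your cluster's network plugin enforces NetworkPolicy.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that blocks all inbound traffic to every pod in `namespace`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector: applies to all pods in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules listed, so all inbound traffic is denied
        },
    }


if __name__ == "__main__":
    # Hypothetical namespaces, one per team or environment.
    policies = [default_deny_ingress(ns) for ns in ("team-a", "team-b")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```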

Use Kubernetes Native Tools

The Kubernetes ecosystem offers tools for logging, monitoring, security, and more that are built specifically for Kubernetes environments. Tools such as the Kubernetes Dashboard, kube-state-metrics, and kube-prometheus provide deeper insights into your clusters and workloads. Because these tools track the Kubernetes API closely, check each one's compatibility with your cluster version whenever you upgrade.

Automate as Much as Possible

In production, aim to automate as many tasks as possible. Use tools like Helm to automate application deployments. Create Continuous Integration (CI) pipelines to automatically build and deploy container images. Leverage Kubernetes CronJobs to automate scheduled tasks. The more you can automate, the less chance of human error and the more consistent your environment will be. However, be careful not to over-automate, as some tasks still require human oversight and governance.
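As an example of automating a scheduled task, the Python sketch below builds a `batch/v1` CronJob manifest for a nightly job. The job name, image, and command are hypothetical placeholders; `concurrencyPolicy: Forbid` is one reasonable choice for jobs such as backups that should never overlap.

```python
import json


def nightly_cronjob(name: str, image: str, command: list, schedule: str = "0 2 * * *") -> dict:
    """Build a batch/v1 CronJob manifest; name, image, and command are placeholders."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,           # 02:00 daily; controller's time zone unless spec.timeZone is set
            "concurrencyPolicy": "Forbid",  # skip a run if the previous one is still going
            "jobTemplate": {"spec": {"template": {"spec": {
                "restartPolicy": "OnFailure",  # Job pods must use OnFailure or Never
                "containers": [{"name": name, "image": image, "command": command}],
            }}}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(nightly_cronjob("db-backup", "registry.example.com/backup:1.0", ["/bin/backup.sh"]), indent=2))
```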

Practice the Principle of Least Privilege

Following the principle of least privilege means only providing users and applications with the minimum permissions needed. For Kubernetes, this means using role-based access control (RBAC) to grant narrowly scoped roles to users and service accounts. Start with limited permissions and add more access as needed. This limits the potential damage from compromised accounts or workloads. Review permissions regularly and make adjustments to ensure the principle of least privilege is maintained.
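In practice, least privilege often starts with a namespaced Role granting only the verbs a workload needs, bound to that workload's service account. The Python sketch below generates a read-only Role for pods and a RoleBinding for it; the namespace `payments`, the service account `orders-api`, and the role name are hypothetical.

```python
import json


def pod_reader_rbac(namespace: str, service_account: str) -> dict:
    """Read-only access to pods in one namespace for one service account (names are placeholders)."""
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",  # namespaced, unlike ClusterRole
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [{
            "apiGroups": [""],                  # "" is the core API group, where pods live
            "resources": ["pods"],
            "verbs": ["get", "list", "watch"],  # read-only; no create, update, or delete
        }],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"pod-reader-{service_account}", "namespace": namespace},
        "subjects": [{"kind": "ServiceAccount", "name": service_account, "namespace": namespace}],
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role", "name": "pod-reader"},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [role, binding]}


if __name__ == "__main__":
    print(json.dumps(pod_reader_rbac("payments", "orders-api"), indent=2))
```

After applying it, and assuming no other bindings grant broader access, `kubectl auth can-i list pods --as=system:serviceaccount:payments:orders-api -n payments` should answer `yes`, while the same check for `delete pods` should answer `no`.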

Monitor Your Clusters and Workloads

Comprehensive monitoring is essential for running Kubernetes in production. Monitor your Kubernetes control plane components, nodes, and workloads to detect issues early. Review CPU, memory, storage, and network usage for signs your clusters are over- or under-provisioned. Monitor application metrics to ensure workloads are performing as expected. Set up alerts to notify you of anomalies so you can take action quickly. Robust monitoring gives you visibility into the health and performance of your Kubernetes environment.
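One simple way to spot over- or under-provisioning is to compare each container's actual CPU usage with its CPU request. The Python sketch below classifies samples using hypothetical thresholds (below 20% of the request suggests over-provisioning, above 90% suggests under-provisioning); in a real cluster the usage figures would come from a metrics pipeline such as metrics-server or Prometheus, and the thresholds should be tuned to your workloads.

```python
from dataclasses import dataclass


@dataclass
class CpuSample:
    container: str
    request_m: int  # CPU request in millicores, from the pod spec
    usage_m: int    # observed CPU usage in millicores, from your metrics pipeline


def classify(sample: CpuSample, low: float = 0.2, high: float = 0.9) -> str:
    """Label a container by how its CPU usage compares with its request (thresholds are assumptions)."""
    if sample.request_m <= 0:
        return "no-request"  # nothing to compare usage against
    ratio = sample.usage_m / sample.request_m
    if ratio < low:
        return "over-provisioned"   # reserving capacity it does not use
    if ratio > high:
        return "under-provisioned"  # at risk of CPU contention
    return "ok"


if __name__ == "__main__":
    samples = [CpuSample("api", 500, 40), CpuSample("worker", 250, 240), CpuSample("cache", 1000, 450)]
    for s in samples:
        print(s.container, classify(s))
```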

Conclusion

In closing, Kubernetes provides a robust container orchestration platform that helps you manage containerized applications across private, public, and hybrid clouds. Take time to understand the key components, features, and architecture so you can take full advantage of its capabilities. Evaluate your application needs and infrastructure to determine where Kubernetes will deliver the most value. Start small, learn through hands-on experience, and expand to more advanced use cases over time.

With the right strategy, Kubernetes can help you achieve greater scale, resiliency, and velocity on your cloud-native journey. Following best practices around security, monitoring, and deployment will enable you to operate Kubernetes efficiently in production.

The Kubernetes ecosystem is vast, so navigate it wisely by focusing first on the core tenets. Mastering Kubernetes fundamentals will provide a solid foundation as you expand your containerized footprint.

Looking to adopt Kubernetes to run your distributed systems resiliently? Contact us for a free consultation, and our DevOps experts will help you with your needs.